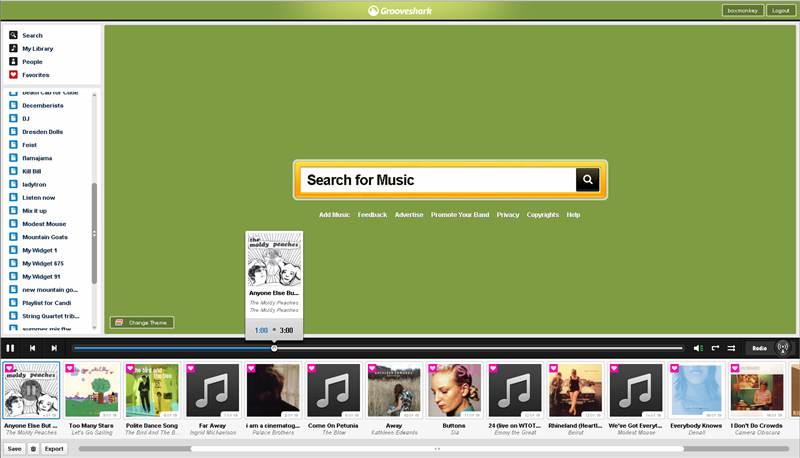

One of the exciting features coming to Grooveshark 2.0 (yes, VIP only at first) starting on the 24th is client-side caching.

What does that mean?

We are finally taking advantage of the fact that, because we use Flash, we have a stateful application capable of remembering even dynamic data. In other words, if the client already knows something, it doesn’t have to ask the server again.
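To make the idea concrete, here is a minimal sketch in Python (not the actual ActionScript client). The names `ClientCache`, `fake_server`, and the `playlist:42` key are made up for illustration. The point is only that the second request for the same data never leaves the client.

```python
from typing import Any, Callable, Dict


class ClientCache:
    """In-memory cache sitting in front of a (hypothetical) server call."""

    def __init__(self, fetch_from_server: Callable[[str], Any]) -> None:
        self._fetch = fetch_from_server
        self._data: Dict[str, Any] = {}
        self.server_calls = 0  # counted only to make the effect visible

    def get(self, key: str) -> Any:
        # Ask the server only if the client does not already know the answer.
        if key not in self._data:
            self.server_calls += 1
            self._data[key] = self._fetch(key)
        return self._data[key]


def fake_server(key: str) -> dict:
    # Stand-in for a real request; the response shape is an assumption.
    return {"key": key, "songs": ["song-1", "song-2"]}


cache = ClientCache(fake_server)
first = cache.get("playlist:42")   # goes to the server
second = cache.get("playlist:42")  # answered from client memory
```

Because the cache lives in the running application, it lasts only as long as the page stays open.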

For our users this is exciting because navigating back and forth between pages they have already seen should be almost instantaneous. For the backend it’s exciting because the client now acts as another layer of cache in front of the database, and can keep data in memory even when it must be flushed from memcache. Example:

When a user edits their playlist, that data is deleted from memcache (deleted rather than overwritten, to avoid potential race conditions). The next time that playlist data is requested, it must be loaded from the database. In 2.0, the chances of the client asking for that playlist data again are very slim, because it will usually still be in the client’s memory, so in most cases we are saved a round trip to the database.
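The playlist example can be sketched the same way. This is a toy model in Python with plain dictionaries standing in for the database, memcache, and the client’s memory. All function names are hypothetical, and it assumes the client updates its own copy when it saves an edit.

```python
database = {"playlist:42": ["song-1", "song-2"]}
memcache = {}       # stand-in for the real memcache layer
client_memory = {}  # stand-in for the client-side cache
db_reads = 0        # counted only to make the effect visible


def server_get(key):
    """Serve from memcache when possible, otherwise read the database."""
    global db_reads
    if key in memcache:
        return memcache[key]
    db_reads += 1
    value = list(database[key])
    memcache[key] = value
    return value


def server_save(key, songs):
    """Write to the database and delete (not overwrite) the memcache entry."""
    database[key] = list(songs)
    memcache.pop(key, None)


def client_get(key):
    if key not in client_memory:
        client_memory[key] = server_get(key)
    return client_memory[key]


def client_edit(key, songs):
    server_save(key, songs)
    client_memory[key] = list(songs)  # assumption: the client keeps what it just saved


client_get("playlist:42")  # first view: one database read
client_edit("playlist:42", ["song-1", "song-2", "song-3"])
after_edit = client_get("playlist:42")  # answered from client memory
```

After the edit, memcache no longer holds the playlist, yet viewing it again costs no extra database read, because the client never has to ask.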

In the slightly longer term (before Grooveshark 2.0 is available to the public), we will be taking advantage of Flash LSOs (Local Shared Objects) to remember certain data even between reloads, so things like browsing your library will be lightning fast and won’t require loading data from the server at all, unless you changed your library from another computer.
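As a rough illustration of caching across reloads, here is a Python sketch that uses a JSON file as a stand-in for a Flash LSO. The revision number used to notice a change made from another computer is purely an assumption for this example, as are all the names. It is one possible way to detect a stale copy, not a description of how the real client does it.

```python
import json
import os
import tempfile
from typing import Callable, List, Optional


class PersistentLibraryCache:
    """Stores the library on disk so it survives a reload (LSO stand-in)."""

    def __init__(self, path: str) -> None:
        self._path = path

    def load(self) -> Optional[dict]:
        if not os.path.exists(self._path):
            return None
        with open(self._path, "r", encoding="utf-8") as handle:
            return json.load(handle)

    def save(self, revision: int, songs: List[str]) -> None:
        with open(self._path, "w", encoding="utf-8") as handle:
            json.dump({"revision": revision, "songs": songs}, handle)


def get_library(
    store: PersistentLibraryCache,
    current_revision: int,  # hypothetical: a cheap "has anything changed?" token
    fetch_library: Callable[[], List[str]],
) -> List[str]:
    saved = store.load()
    if saved is not None and saved["revision"] == current_revision:
        return saved["songs"]  # nothing changed elsewhere: no library download
    songs = fetch_library()
    store.save(current_revision, songs)
    return songs


fetches = []


def fetch_library() -> List[str]:
    fetches.append(1)  # record each full download
    return ["song-1", "song-2"]


path = os.path.join(tempfile.mkdtemp(), "library.json")
store = PersistentLibraryCache(path)
first_load = get_library(store, 7, fetch_library)   # nothing stored yet: downloads
second_load = get_library(store, 7, fetch_library)  # "after a reload": read from disk
third_load = get_library(store, 8, fetch_library)   # changed elsewhere: downloads again
```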

Client-side caching is just one of the many ways in which we are working to improve the user experience while hopefully reducing load on our servers, and I will be posting more details over the next few days, so stay tuned.